Create a rotating proxy crawler in Python 3

One day, a friend of mine was crawling a website (let's be honest: everybody crawls someone else), but of course he got banned many times and had to start Tor or find some other workaround. After some research, I couldn't find a complete rotating proxy system in Python, so I created my own and I want to share it with you.

The crawler will follow this flow:

1. At the very beginning, it retrieves a list of valid HTTPS proxies from sslproxies.org.

1.1. With BeautifulSoup, it scans the table and extracts the values.

1.2. With the Python module fake-useragent, it generates a user agent to simulate a real request.

2. It does its stuff and makes the calls (in this case, we use a range from 1 to 100 to test it).

3. Every 10 requests (you can of course change this value), it changes proxy.

4. If the proxy is broken, it deletes it from our list and picks another one.
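
The flow above can be tried without touching the network. What follows is only a sketch under assumptions, not the script built in this article: crawl, fake_fetch, total and rotate_every are hypothetical names, and fetch stands in for the real HTTP call.

```python
import random


def crawl(proxies, fetch, total=100, rotate_every=10):
    """Call fetch(proxy) `total` times, rotating and pruning proxies.

    `fetch` is a stand-in for the real request: it returns a result, or
    raises an exception when the proxy is broken.
    """
    pool = list(proxies)  # work on a copy of the caller's list
    results = []
    if not pool:
        return results, pool
    proxy = random.choice(pool)

    for n in range(1, total + 1):
        try:
            results.append(fetch(proxy))
        except Exception:
            # Broken proxy: drop it and pick another one, if any are left
            pool.remove(proxy)
            if not pool:
                break
            proxy = random.choice(pool)
            continue
        # Every `rotate_every` requests, switch to a new random proxy
        if n % rotate_every == 0:
            proxy = random.choice(pool)

    return results, pool


def fake_fetch(proxy):
    """Pretend that any proxy whose name starts with 'bad' is broken."""
    if proxy.startswith('bad'):
        raise ConnectionError(proxy + ' is down')
    return proxy


if __name__ == '__main__':
    seen, alive = crawl(['good-1', 'bad-1', 'good-2'], fake_fetch)
    print(len(seen), 'successful calls; proxies still alive:', alive)
```

As in the real script, a failed call is not retried: it still uses up one of the total iterations.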

Prerequisites

Our code will be compatible with Python 3.x, so make sure you have it installed. We are also going to use two external modules:

BeautifulSoup, to scrape the source. Install it with pip3 install beautifulsoup4

Fake-useragent, to retrieve a random User Agent and simulate a "real" request. Install it with pip3 install fake-useragent

Note: fake-useragent is optional. You can replace it with a hard-coded, fixed string; it doesn't matter.
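
If you want the script to keep working when fake-useragent is not installed, one possible approach is a small fallback like the sketch below. The names pick_user_agent and FALLBACK_USER_AGENT are made up for this example, and the fixed string is just an illustrative value.

```python
# A fixed string used when fake-useragent is missing or fails (illustrative value)
FALLBACK_USER_AGENT = (
    'Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0'
)


def pick_user_agent():
    """Return a random user agent if fake-useragent works, else the fixed one."""
    try:
        from fake_useragent import UserAgent
        return UserAgent().random
    except Exception:
        # ImportError when the module is not installed, or any error the
        # library raises while loading its user agent data
        return FALLBACK_USER_AGENT


if __name__ == '__main__':
    print(pick_user_agent())
```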

Coding a hot damn script

Every script starts with its imports, so we begin by importing the libraries we need.

from urllib.request import Request, urlopen
from bs4 import BeautifulSoup
from fake_useragent import UserAgent
import random

Now we create a UserAgent object, which will hand us a random user agent whenever we ask for one, and initialize an empty list where we'll put our proxies.

ua = UserAgent() # ua.random returns a random user agent string
proxies = [] # Will contain proxies as dicts: {'ip': ..., 'port': ...}

We like a structure where the most important code sits at the top and the small helper functions at the bottom. Python runs a file from top to bottom, so a top-level call can only use functions that have already been defined. To get around this, our code will live in a function called main, which is called at the very end of the file, like this:

# Main function
def main():
    # Our code here
    pass # placeholder so the empty function is valid Python

if __name__ == '__main__':
    main()
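
Why does this work? Python looks up a function's name when the call actually runs, not when the calling function is defined. A tiny illustration, where helper is a hypothetical name used only for this example:

```python
def main():
    # helper is defined further down, but it already exists by the time
    # main() is actually called at the bottom of the file
    return helper()


def helper():
    return 'called from main'


if __name__ == '__main__':
    print(main())
```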

Retrieve proxies

Because we want a rotating proxy system, we need a list of proxies before doing anything else. We'll get it by scraping sslproxies.org with BeautifulSoup (BS from now on), one of the most popular Python scraping libraries.

Inside our main function, we initialize a new Request, attach a User Agent to it and retrieve its contents.

    # Retrieve latest proxies
    proxies_req = Request('https://www.sslproxies.org/')
    proxies_req.add_header('User-Agent', ua.random)
    proxies_doc = urlopen(proxies_req).read().decode('utf8')
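
If you want to check what a Request carries before anything is sent, you can inspect it offline. This is just a sketch: build_listing_request is a hypothetical helper, and the user agent string is a made-up test value.

```python
from urllib.request import Request


def build_listing_request(url, user_agent):
    """Create a Request carrying the given User-Agent header (nothing is sent)."""
    req = Request(url)
    req.add_header('User-Agent', user_agent)
    return req


if __name__ == '__main__':
    req = build_listing_request('https://www.sslproxies.org/', 'my-test-agent/1.0')
    # urllib stores header names capitalized, i.e. as 'User-agent'
    print(req.header_items())
```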

Now we create a new BS instance and scan all the rows contained in the main table.

    soup = BeautifulSoup(proxies_doc, 'html.parser')
    proxies_table = soup.find(id='proxylisttable')

    # Save proxies in the array
    for row in proxies_table.tbody.find_all('tr'):
        proxies.append({
            'ip': row.find_all('td')[0].string,
            'port': row.find_all('td')[1].string
        })
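
The loop above trusts whatever the table contains. In BS, .string is None when a cell is empty or has more than one child, so it may be worth filtering the scraped rows before using them. A minimal sketch, where is_valid_proxy is a hypothetical helper and the sample rows are invented:

```python
import ipaddress


def is_valid_proxy(ip, port):
    """Return True if ip looks like an IP address and port is in 1-65535."""
    if not isinstance(ip, str) or not isinstance(port, str):
        return False  # e.g. None coming from an empty table cell
    try:
        ipaddress.ip_address(ip.strip())
        return 1 <= int(port) <= 65535
    except ValueError:
        return False


if __name__ == '__main__':
    scraped = [
        {'ip': '203.0.113.7', 'port': '8080'},
        {'ip': None, 'port': '3128'},          # empty cell
        {'ip': '203.0.113.9', 'port': 'n/a'},  # not a number
    ]
    usable = [p for p in scraped if is_valid_proxy(p['ip'], p['port'])]
    print(usable)
```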

With proxies.append, we save a new dict with the keys ip and port. At this point, all we have to do is to get a random proxy from our list. Since we need this many times, we'll put the code inside a function called random_proxy, right before the line if __name__ == '__main__':

# Return the index of a random proxy (we need the index to delete the proxy if it's not working)
def random_proxy():
    return random.randint(0, len(proxies) - 1)

Note that we return the index and not the proxy itself. Why? Because if a proxy fails, we want to remove it from the list so that we won't pick it again.
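
One thing to keep in mind: once every proxy has been deleted, random.randint(0, -1) raises a ValueError. A possible way to guard against that is sketched below. Both random_proxy_index and drop_proxy are hypothetical names, and unlike random_proxy they take the list as an argument.

```python
import random


def random_proxy_index(proxies):
    """Return a random valid index into proxies, or None if the list is empty."""
    if not proxies:
        return None
    return random.randrange(len(proxies))


def drop_proxy(proxies, index):
    """Delete the proxy at index and return the index of a replacement."""
    del proxies[index]
    return random_proxy_index(proxies)


if __name__ == '__main__':
    pool = [{'ip': '203.0.113.7', 'port': '8080'}]
    current = random_proxy_index(pool)
    current = drop_proxy(pool, current)
    print(current)  # no proxies left, so there is no replacement index
```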

Request, request, request

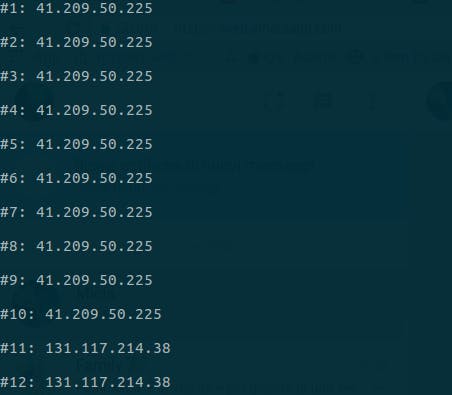

For testing purposes, we'll make 100 requests to icanhazip.com, which returns our current IP (the proxy's, of course). Using the random_proxy function written above, we pick a proxy and attach it to each request; every 10 requests we switch to a new one.

    proxy_index = random_proxy()
    proxy = proxies[proxy_index]

    for n in range(1, 101): # 1 to 100 inclusive
        req = Request('http://icanhazip.com')
        req.set_proxy(proxy['ip'] + ':' + proxy['port'], 'http')

        # Every 10 requests, generate a new proxy
        if n % 10 == 0:
            proxy_index = random_proxy()
            proxy = proxies[proxy_index]
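
To see what set_proxy actually changes, you can build the request and inspect it without sending anything. This is only an illustrative sketch: proxied_request is a hypothetical helper and the address comes from a range reserved for documentation.

```python
from urllib.request import Request


def proxied_request(url, proxy):
    """Build a Request for url that will be sent through proxy (nothing is sent)."""
    req = Request(url)
    req.set_proxy(proxy['ip'] + ':' + proxy['port'], 'http')
    return req


if __name__ == '__main__':
    example = {'ip': '203.0.113.7', 'port': '8080'}  # documentation-range address
    req = proxied_request('http://icanhazip.com', example)
    print(req.host)         # the proxy address, where the connection is opened
    print(req.has_proxy())  # whether a proxy has been attached
```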

Now, with a try/except, we intercept broken proxies, delete them from our list and notify the user; if the request succeeds, we print the IP that icanhazip sees.

        # Make the call
        try:
            my_ip = urlopen(req).read().decode('utf8')
            print('#' + str(n) + ': ' + my_ip)
        except Exception: # If error, delete this proxy and find another one
            del proxies[proxy_index]
            print('Proxy ' + proxy['ip'] + ':' + proxy['port'] + ' deleted.')
            proxy_index = random_proxy()
            proxy = proxies[proxy_index]
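
Free proxies are often slow or dead, and urlopen without a timeout can wait on them for a very long time. One option is to pass a timeout and catch only network-related errors, as in the sketch below. This is a suggestion rather than part of the original script: fetch_or_none and its opener parameter are hypothetical, and the two fake openers exist only so the example runs offline.

```python
import io
from http.client import HTTPException
from urllib.request import urlopen


def fetch_or_none(req, opener=urlopen, timeout=5):
    """Return the decoded response body, or None if the proxy failed."""
    try:
        with opener(req, timeout=timeout) as response:
            return response.read().decode('utf8').strip()
    except (OSError, HTTPException):
        # URLError, timeouts and connection resets are all OSError subclasses;
        # HTTPException covers malformed replies from a misbehaving proxy
        return None


def dead_proxy(req, timeout):
    """Fake opener simulating a proxy that never answers."""
    raise TimeoutError('proxy did not answer')


def live_proxy(req, timeout):
    """Fake opener simulating a successful reply from icanhazip."""
    return io.BytesIO(b'203.0.113.7\n')


if __name__ == '__main__':
    print(fetch_or_none('any request', opener=dead_proxy))
    print(fetch_or_none('any request', opener=live_proxy))
```

In the loop, a None result would take the place of the except branch: delete the proxy and pick another one.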

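Save the file (in my case scrape_me.py, just a tribute to "Rape me" by Nirvana) and execute it. For every request that succeeds, you'll see its number followed by the IP that icanhazip sees; for every broken proxy, you'll see a line saying it was deleted.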

Code

This is the full code; if you prefer, you can also find it in this GitHub repository.

from urllib.request import Request, urlopen
from bs4 import BeautifulSoup
from fake_useragent import UserAgent
import random

ua = UserAgent() # ua.random returns a random user agent string
proxies = [] # Will contain proxies as dicts: {'ip': ..., 'port': ...}

# Main function
def main():
    # Retrieve latest proxies
    proxies_req = Request('https://www.sslproxies.org/')
    proxies_req.add_header('User-Agent', ua.random)
    proxies_doc = urlopen(proxies_req).read().decode('utf8')

    soup = BeautifulSoup(proxies_doc, 'html.parser')
    proxies_table = soup.find(id='proxylisttable')

    # Save proxies in the array
    for row in proxies_table.tbody.find_all('tr'):
        proxies.append({
            'ip': row.find_all('td')[0].string,
            'port': row.find_all('td')[1].string
        })

    # Choose a random proxy
    proxy_index = random_proxy()
    proxy = proxies[proxy_index]

    for n in range(1, 101): # 1 to 100 inclusive
        req = Request('http://icanhazip.com')
        req.set_proxy(proxy['ip'] + ':' + proxy['port'], 'http')

        # Every 10 requests, generate a new proxy
        if n % 10 == 0:
            proxy_index = random_proxy()
            proxy = proxies[proxy_index]

        # Make the call
        try:
            my_ip = urlopen(req).read().decode('utf8')
            print('#' + str(n) + ': ' + my_ip)
        except Exception: # If error, delete this proxy and find another one
            del proxies[proxy_index]
            print('Proxy ' + proxy['ip'] + ':' + proxy['port'] + ' deleted.')
            proxy_index = random_proxy()
            proxy = proxies[proxy_index]

# Return the index of a random proxy (we need the index to delete the proxy if it's not working)
def random_proxy():
    return random.randint(0, len(proxies) - 1)

if __name__ == '__main__':
    main()

Additional resources

Take a look at this awesome article by Zach Burchill about how to scrape a lot of data in Python.